The process of setting up Kubernetes worker nodes involves configuring key services such as kubelet, kube-proxy, and containerd, alongside installing essential software and packages. This configuration allows worker nodes to join the Kubernetes cluster, enabling them to run containerized workloads, manage network traffic, and ensure seamless communication between pods and services.

This task involves setting up two worker nodes for a Kubernetes cluster and configuring several critical services required for the cluster to function properly. Let's break down the main components and explain their importance:

Required Packages:

- socat: A utility that relays data in both directions between two independent channels. It is needed on the node to support the `kubectl port-forward` command.

- conntrack: The userspace tool for inspecting and manipulating the Linux kernel's connection-tracking table, which records the state of connections (especially for NAT). kube-proxy uses it to clear stale connection entries, for example for UDP Services.

- ipset: A tool for managing sets of IP addresses, networks, or ports that firewall rules can match against efficiently. It is used, for example, by kube-proxy's IPVS mode and by some network plugins.
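The section does not show how these packages get onto the node. The sketch below assumes a Debian/Ubuntu host with `apt-get`, where each package installs a binary of the same name. It lists which of the three commands are missing and prints the matching install command instead of running it, so you can review it first. The function names are hypothetical.

```bash
#!/bin/sh
# Hypothetical helpers. Assumes a Debian/Ubuntu host with apt-get, where the
# socat, conntrack and ipset packages each provide a binary of the same name.

# missing_commands NAME...: print every NAME that is not found on PATH.
missing_commands() {
  local name
  for name in "$@"; do
    command -v "$name" >/dev/null 2>&1 || echo "$name"
  done
}

# print_install_cmd: print the apt-get command for whichever of the three
# packages is missing. Prints nothing if all three are already present.
print_install_cmd() {
  local missing
  missing="$(missing_commands socat conntrack ipset | tr '\n' ' ')"
  missing="${missing% }"    # drop the trailing space left by tr
  if [ -n "$missing" ]; then
    echo "sudo apt-get -y install $missing"
  fi
}
```

`conntrack` and `ipset` are installed under `/usr/sbin`, so run the check from a shell whose `PATH` includes that directory. Otherwise they will be reported as missing even when installed.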

Installed Software:

- crictl (v1.0.0-beta.0):
  - Purpose: `crictl` is a command-line interface for container runtimes that implement the Container Runtime Interface (CRI). It lets Kubernetes administrators inspect, debug, and manage containers and pods directly on a node.
  - Relevance: Provides a way to inspect and troubleshoot container operations on the worker nodes (e.g., listing, starting, stopping, and querying containers).

- runsc (latest):
  - Purpose: `runsc` is the OCI runtime of Google's gVisor. gVisor sandboxes containers behind a user-space kernel instead of running them directly on the host kernel.
  - Relevance: Adds a stronger isolation layer for untrusted workloads. It is optional, and regular containers use runc.

- runc (v1.0.0-rc5):
  - Purpose: `runc` is a low-level container runtime that creates and runs containers according to the Open Container Initiative (OCI) runtime specification. Higher-level runtimes such as containerd call it under the hood to interface with the operating system.
  - Relevance: Essential for creating containers on the worker nodes. It is the default runtime configured for containerd in this setup.

- CNI plugins (v0.6.0):
  - Purpose: CNI (Container Network Interface) plugins are responsible for setting up networking for containers. They enable containerized applications to communicate across the network (between pods and nodes).
  - Relevance: Required for networking in Kubernetes, ensuring that pods can communicate internally (intra-cluster) and externally (out of the cluster).

- containerd (v1.1.0):
  - Purpose: `containerd` is a high-level container runtime that manages the complete container lifecycle: pulling and storing images, and starting, stopping, and supervising containers.
  - Relevance: `containerd` is the container runtime on the worker nodes. The kubelet talks to it through its CRI socket (`/var/run/containerd/containerd.sock`) to run the containers of every pod.

- kubectl (v1.10.2):
  - Purpose: `kubectl` is the command-line interface for interacting with Kubernetes clusters. It allows administrators to manage and monitor cluster resources (e.g., deploy apps, view logs, get cluster status).
  - Relevance: Useful for testing the worker node's connectivity and integration with the cluster, though it is mainly used by cluster administrators.

- kube-proxy (v1.10.2):
  - Purpose: `kube-proxy` is a network proxy that runs on each node in the cluster. It maintains the network rules (iptables rules in this setup) that send traffic addressed to a Service to one of the pods backing it.
  - Relevance: Essential for routing Service traffic within the Kubernetes cluster.

- kubelet (v1.10.2):
  - Purpose: `kubelet` is the main agent running on each worker node. It registers the node with the Kubernetes control plane and continuously monitors the node's containers to make sure the ones described in its assigned pods are running.
  - Relevance: The primary service responsible for managing the containers on the worker nodes. Without `kubelet`, a worker node cannot join or operate in the cluster.
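After installation, a quick way to confirm that each binary is on `PATH` and is the expected release is to ask it for its version. The sketch below does this for every binary in the list. `report_versions` is a hypothetical helper, and the version flags are the ones we understand these tools to accept, not something this guide verifies.

```bash
#!/bin/sh
# Hypothetical helper: print "<binary>: <first line of version output>" for
# each worker-node binary, or "<binary>: not installed" if it is not on PATH.
# The version flags below are assumptions about what each tool accepts.
report_versions() {
  local entry name
  for entry in \
    "crictl --version" \
    "runc --version" \
    "runsc --version" \
    "containerd --version" \
    "kubectl version --client" \
    "kube-proxy --version" \
    "kubelet --version"
  do
    name="${entry%% *}"    # the first word is the binary name
    if ! command -v "$name" >/dev/null 2>&1; then
      echo "$name: not installed"
      continue
    fi
    # $entry is left unquoted on purpose so it splits into command + flags.
    echo "$name: $($entry 2>&1 | head -n 1)"
  done
}
```

Running `report_versions` on a worker should print one line per binary. A `not installed` line, or a version other than the one listed above, points at the install step.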

Overview of Services:

- Kubelet Service:
  - Registers the worker node with the Kubernetes control plane.
  - Ensures that the containers of the pods assigned to the node are running correctly.
  - Reports node and pod status back to the control plane.

- Kube-proxy Service:
  - Manages network rules on each worker node to route traffic between Services and pods across the cluster.
  - Implements Service virtual IPs through address translation and load balancing across each Service's pods.

- Containerd Service:
  - Manages container execution and lifecycle.
  - Pulls and manages container images.
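The load balancing that kube-proxy performs in iptables mode (the mode this guide sets in `kube-proxy-config.yaml`) is probabilistic. For a Service with N endpoints, kube-proxy writes N rules in the Service's chain. Rule i (counting from 0) uses the iptables `statistic` module to match with probability 1/(N - i), and the last rule matches unconditionally, so each endpoint receives an equal 1/N share. The hypothetical helper below only computes those per-rule probabilities to illustrate the arithmetic. It does not touch iptables.

```bash
#!/bin/sh
# Hypothetical helper: for a Service with N endpoints, print the match
# probability that iptables-mode kube-proxy attaches to each successive rule.
# Rule i (0-based) matches with probability 1/(N - i). The last rule has no
# statistic match, so every packet that reaches it goes to that endpoint.
svc_rule_probabilities() {
  awk -v n="$1" 'BEGIN {
    for (i = 0; i < n; i++) {
      if (i == n - 1)
        printf "endpoint %d: unconditional\n", i + 1
      else
        printf "endpoint %d: %.5f\n", i + 1, 1 / (n - i)
    }
  }'
}
```

For three endpoints, the first rule catches 1/3 of packets. The second catches 1/2 of the remaining 2/3, which is another 1/3, and the last rule takes the final 1/3. On a running node with Services defined, `sudo iptables -t nat -S | grep -- '--probability'` should show the real rules.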

- Let's first run the following command to download the tools we need for the three services, create the directories they use, and install the binaries:

```bash
wget -q --show-progress --https-only --timestamping \
  https://github.com/kubernetes-incubator/cri-tools/releases/download/v1.0.0-beta.0/crictl-v1.0.0-beta.0-linux-amd64.tar.gz \
  https://storage.googleapis.com/gvisor/releases/release/latest/x86_64/runsc \
  https://github.com/opencontainers/runc/releases/download/v1.0.0-rc5/runc.amd64 \
  https://github.com/containernetworking/plugins/releases/download/v0.6.0/cni-plugins-amd64-v0.6.0.tgz \
  https://github.com/containerd/containerd/releases/download/v1.1.0/containerd-1.1.0.linux-amd64.tar.gz \
  https://storage.googleapis.com/kubernetes-release/release/v1.10.2/bin/linux/amd64/kubectl \
  https://storage.googleapis.com/kubernetes-release/release/v1.10.2/bin/linux/amd64/kube-proxy \
  https://storage.googleapis.com/kubernetes-release/release/v1.10.2/bin/linux/amd64/kubelet &&
sudo mkdir -p /etc/cni/net.d /opt/cni/bin /var/lib/kubelet /var/lib/kube-proxy /var/lib/kubernetes /var/run/kubernetes &&
chmod +x kubectl kube-proxy kubelet runc.amd64 runsc &&
sudo mv runc.amd64 runc &&
sudo mv kubectl kube-proxy kubelet runc runsc /usr/local/bin/ &&
sudo tar -xvf crictl-v1.0.0-beta.0-linux-amd64.tar.gz -C /usr/local/bin/ &&
sudo tar -xvf cni-plugins-amd64-v0.6.0.tgz -C /opt/cni/bin/ &&
sudo tar -xvf containerd-1.1.0.linux-amd64.tar.gz -C /
```

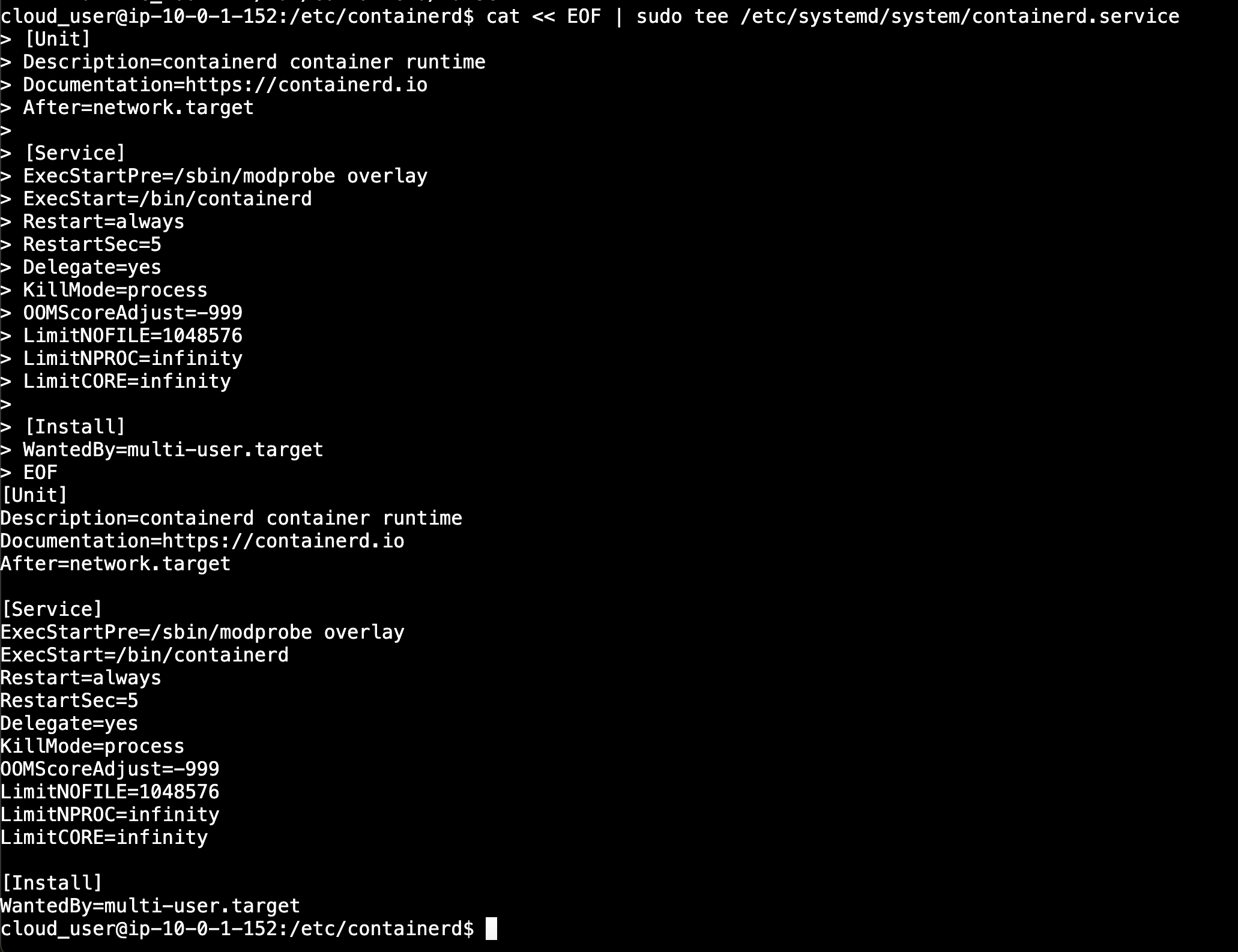
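The install command is one long `&&` chain, so it stops at the first failing step. It is therefore worth confirming afterwards that every file landed where the unit files in this guide expect it. The sketch below checks this. The path list is our reading of where these commands put things (the containerd tarball is extracted under `/`, hence `/bin/containerd`, and `containerd-shim` is assumed to ship alongside it). `check_installed` is a hypothetical helper, and its optional ROOT argument exists only so it can be dry-run against a scratch directory.

```bash
#!/bin/sh
# Hypothetical helper: report files the download/install step should have
# produced that are missing or not executable. ROOT defaults to "/" and is
# only there so the check can be dry-run against a scratch directory.
check_installed() {
  local root path status
  root="${1:-/}"
  root="${root%/}"    # "/" becomes "", so the paths below stay absolute
  status=0
  for path in \
    /usr/local/bin/kubectl /usr/local/bin/kube-proxy /usr/local/bin/kubelet \
    /usr/local/bin/runc /usr/local/bin/runsc /usr/local/bin/crictl \
    /opt/cni/bin/bridge /opt/cni/bin/loopback /opt/cni/bin/host-local \
    /bin/containerd /bin/containerd-shim
  do
    if [ ! -x "$root$path" ]; then
      echo "missing or not executable: $path"
      status=1
    fi
  done
  return "$status"
}
```

Run `check_installed` with no argument on each worker. Empty output and a zero exit status mean everything is in place.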

- Configure the containerd service. First, create its configuration directory:

```bash
sudo mkdir -p /etc/containerd/
```

Next, create the containerd unit file:

```bash
cat << EOF | sudo tee /etc/systemd/system/containerd.service
[Unit]
Description=containerd container runtime
Documentation=https://containerd.io
After=network.target

[Service]
ExecStartPre=/sbin/modprobe overlay
ExecStart=/bin/containerd
Restart=always
RestartSec=5
Delegate=yes
KillMode=process
OOMScoreAdjust=-999
LimitNOFILE=1048576
LimitNPROC=infinity
LimitCORE=infinity

[Install]
WantedBy=multi-user.target
EOF
```
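`ExecStartPre=/sbin/modprobe overlay` makes sure the overlay filesystem is available before containerd starts. The `overlayfs` snapshotter selected in containerd's `config.toml` depends on it. If containerd fails to start, one thing worth checking is whether the kernel actually lists `overlay` in `/proc/filesystems` after `sudo modprobe overlay`. The sketch below performs that check. `overlay_supported` is a hypothetical helper, and its optional argument exists only so it can be tested against a sample file.

```bash
#!/bin/sh
# Hypothetical helper: succeed if the kernel lists "overlay" as a registered
# filesystem. FILE defaults to /proc/filesystems.
overlay_supported() {
  local fs_list
  fs_list="${1:-/proc/filesystems}"
  # Lines look like "nodev<TAB>name" or "<TAB>name"; the name is the last field.
  awk '$NF == "overlay" { found = 1 } END { exit !found }' "$fs_list"
}
```

On a node where the module is loaded (or built into the kernel), `overlay_supported && echo ok` should print `ok`.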

- Execute the following command to create `/etc/containerd/config.toml`:

```bash
cat << EOF | sudo tee /etc/containerd/config.toml
[plugins]
  [plugins.cri.containerd]
    snapshotter = "overlayfs"
    [plugins.cri.containerd.default_runtime]
      runtime_type = "io.containerd.runtime.v1.linux"
      runtime_engine = "/usr/local/bin/runc"
      runtime_root = ""
    [plugins.cri.containerd.untrusted_workload_runtime]
      runtime_type = "io.containerd.runtime.v1.linux"
      runtime_engine = "/usr/local/bin/runsc"
      runtime_root = "/run/containerd/runsc"
EOF
```

- Depending on which host we are working on, the first worker (`worker0.mylabserver.com`) or the second (`worker1.mylabserver.com`), we will execute one of the following two blocks:

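On `worker0`:

```bash
HOSTNAME=worker0.mylabserver.com
sudo mv ${HOSTNAME}-key.pem ${HOSTNAME}.pem /var/lib/kubelet/
sudo mv ${HOSTNAME}.kubeconfig /var/lib/kubelet/kubeconfig
sudo mv ca.pem /var/lib/kubernetes/
```

On `worker1`:

```bash
HOSTNAME=worker1.mylabserver.com
sudo mv ${HOSTNAME}-key.pem ${HOSTNAME}.pem /var/lib/kubelet/
sudo mv ${HOSTNAME}.kubeconfig /var/lib/kubelet/kubeconfig
sudo mv ca.pem /var/lib/kubernetes/
```

Keep using the same shell session afterwards, because the kubelet configuration and unit files created next expand `${HOSTNAME}` when they are written.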
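The two blocks differ only in the hostname, so the same steps can be written once as a function that takes the hostname as an argument. This is a sketch, not the guide's method. `install_node_certs` is a hypothetical name, and it assumes the four files sit in the current directory, as the blocks above do. It checks that all four files exist before moving anything. The optional ROOT argument and the `SUDO` variable exist only so it can be dry-run in a scratch directory.

```bash
#!/bin/sh
# Hypothetical helper: install_node_certs HOSTNAME [ROOT]
# Moves HOSTNAME.pem, HOSTNAME-key.pem, HOSTNAME.kubeconfig and ca.pem from
# the current directory into place, like the per-worker blocks.
# ROOT (default "/") and SUDO (default "sudo"; set SUDO= to skip it) exist
# only to allow dry runs in a scratch directory.
install_node_certs() {
  local host root sudo f
  host="$1"
  root="${2:-/}"
  root="${root%/}"
  sudo="${SUDO-sudo}"
  if [ -z "$host" ]; then
    echo "usage: install_node_certs HOSTNAME [ROOT]" >&2
    return 2
  fi
  # Refuse to do a partial move if any input file is missing.
  for f in "${host}.pem" "${host}-key.pem" "${host}.kubeconfig" ca.pem; do
    if [ ! -f "$f" ]; then
      echo "missing $f in $(pwd)" >&2
      return 1
    fi
  done
  $sudo mkdir -p "$root/var/lib/kubelet" "$root/var/lib/kubernetes"
  $sudo mv "${host}-key.pem" "${host}.pem" "$root/var/lib/kubelet/" &&
    $sudo mv "${host}.kubeconfig" "$root/var/lib/kubelet/kubeconfig" &&
    $sudo mv ca.pem "$root/var/lib/kubernetes/"
}
```

For example, on `worker0` you would set `HOSTNAME=worker0.mylabserver.com` and then run `install_node_certs "$HOSTNAME"`.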

Create the Kublet-config.yaml file & its corresponding unit file:

cat << EOF | sudo tee /var/lib/kubelet/kubelet-config.yaml

kind: KubeletConfiguration

apiVersion: kubelet.config.k8s.io/v1beta1

authentication:

anonymous:

enabled: false

webhook:

enabled: true

x509:

clientCAFile: "/var/lib/kubernetes/ca.pem"

authorization:

mode: Webhook

clusterDomain: "cluster.local"

clusterDNS:

- "10.32.0.10"

runtimeRequestTimeout: "15m"

tlsCertFile: "/var/lib/kubelet/${HOSTNAME}.pem"

tlsPrivateKeyFile: "/var/lib/kubelet/${HOSTNAME}-key.pem"

EOFcat << EOF | sudo tee /etc/systemd/system/kubelet.service

[Unit]

Description=Kubernetes Kubelet

Documentation=https://github.com/kubernetes/kubernetes

After=containerd.service

Requires=containerd.service

[Service]

ExecStart=/usr/local/bin/kubelet \\

--config=/var/lib/kubelet/kubelet-config.yaml \\

--container-runtime=remote \\

--container-runtime-endpoint=unix:///var/run/containerd/containerd.sock \\

--image-pull-progress-deadline=2m \\

--kubeconfig=/var/lib/kubelet/kubeconfig \\

--network-plugin=cni \\

--register-node=true \\

--v=2 \\

--hostname-override=${HOSTNAME} \\

--allow-privileged=true

Restart=on-failure

RestartSec=5

[Install]

WantedBy=multi-user.target

EOF- Next, we will configure the

kube-proxylike this:

sudo mv kube-proxy.kubeconfig /var/lib/kube-proxy/kubeconfigCreate the kube-proxy-config.yaml file:

cat << EOF | sudo tee /var/lib/kube-proxy/kube-proxy-config.yaml

kind: KubeProxyConfiguration

apiVersion: kubeproxy.config.k8s.io/v1alpha1

clientConnection:

kubeconfig: "/var/lib/kube-proxy/kubeconfig"

mode: "iptables"

clusterCIDR: "10.200.0.0/16"

EOFCreate the kube-proxy unit file:

cat << EOF | sudo tee /etc/systemd/system/kube-proxy.service

[Unit]

Description=Kubernetes Kube Proxy

Documentation=https://github.com/kubernetes/kubernetes

[Service]

ExecStart=/usr/local/bin/kube-proxy \\

--config=/var/lib/kube-proxy/kube-proxy-config.yaml

Restart=on-failure

RestartSec=5

[Install]

WantedBy=multi-user.target

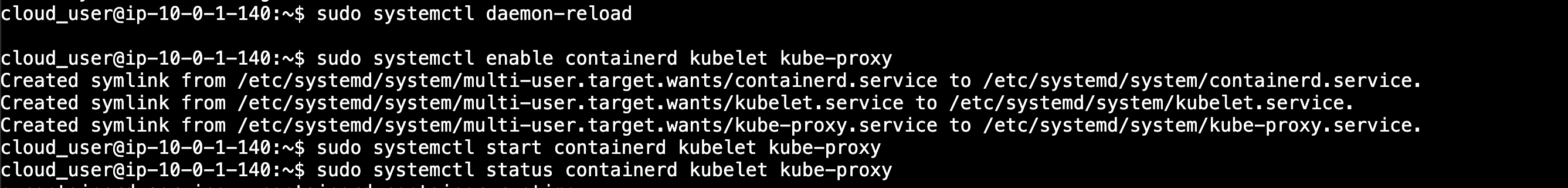

EOFNow we will reload all the services:

```bash
sudo systemctl daemon-reload
sudo systemctl enable containerd kubelet kube-proxy
sudo systemctl start containerd kubelet kube-proxy
```

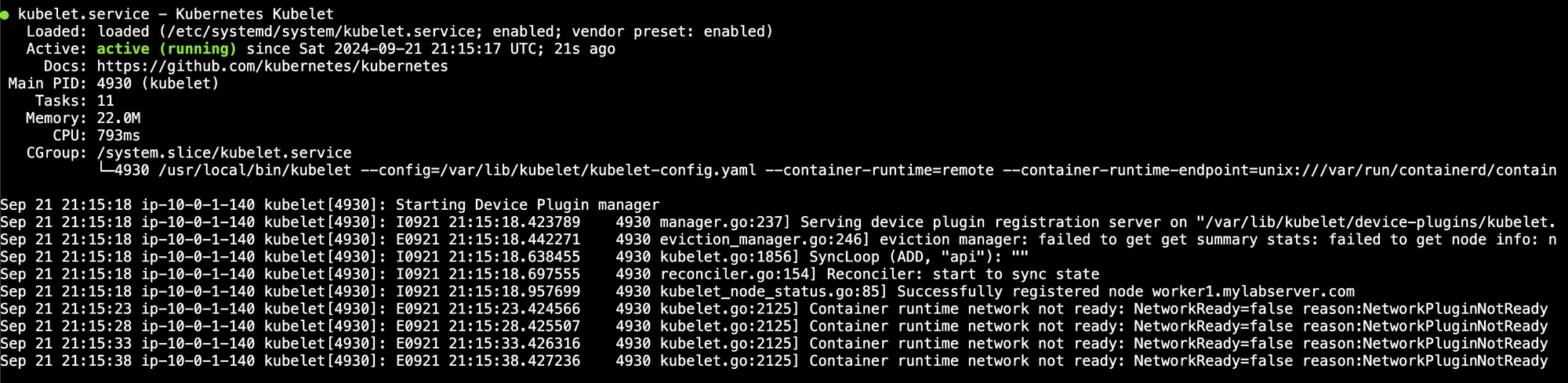

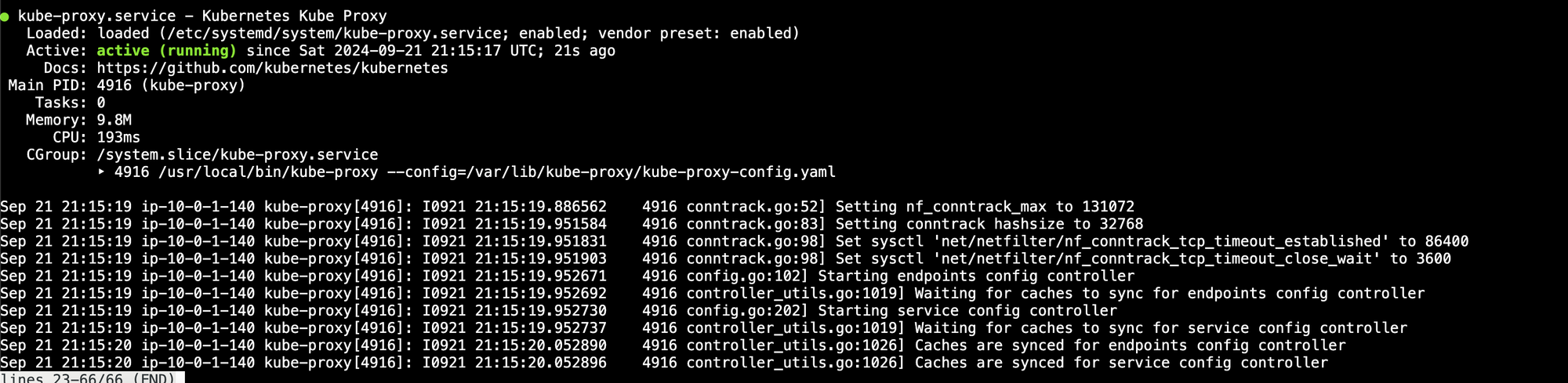

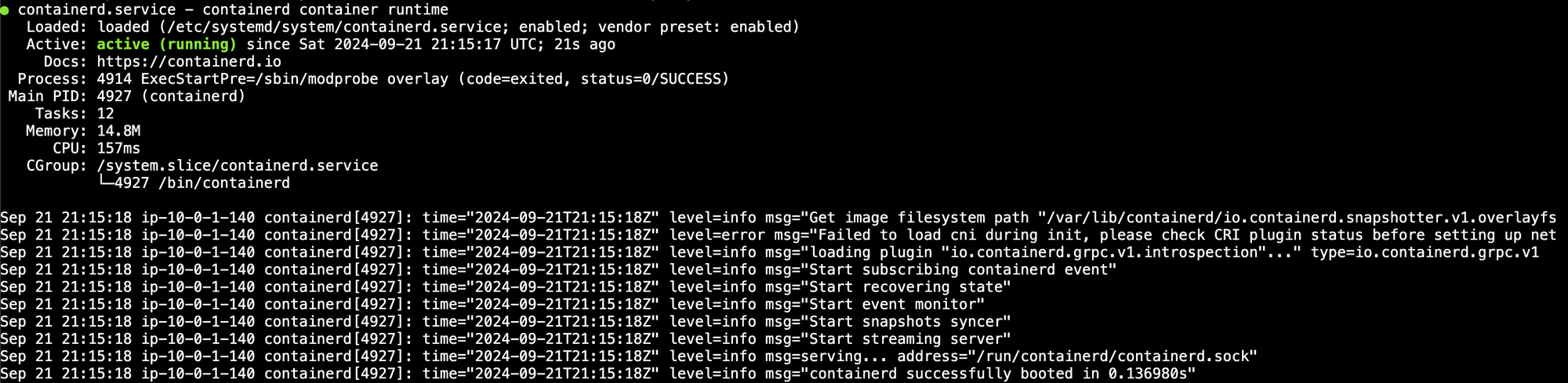

Lastly, let's verify the services are running properly with this command:

```bash
sudo systemctl status containerd kubelet kube-proxy
```
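`systemctl status` shows only a snapshot. Right after `start`, a unit can still be restarting, because these units use `Restart=always` or `Restart=on-failure` with `RestartSec=5`. The sketch below polls instead. `wait_for_units` is a hypothetical helper that gives each unit up to TIMEOUT seconds to pass `systemctl is-active --quiet`. The `IS_ACTIVE_CMD` override is an assumption added only so the loop can be exercised without systemd.

```bash
#!/bin/sh
# Hypothetical helper: wait_for_units TIMEOUT UNIT...
# Polls once per second until each UNIT reports active, giving each unit up
# to TIMEOUT seconds. IS_ACTIVE_CMD (default: systemctl is-active --quiet)
# can be overridden, e.g. to exercise the loop on a machine without systemd.
wait_for_units() {
  local timeout check unit elapsed
  timeout="$1"
  shift
  check="${IS_ACTIVE_CMD:-systemctl is-active --quiet}"
  for unit in "$@"; do
    elapsed=0
    # $check is unquoted on purpose so it splits into command + flags.
    until $check "$unit"; do
      if [ "$elapsed" -ge "$timeout" ]; then
        echo "$unit: not active after ${timeout}s" >&2
        return 1
      fi
      sleep 1
      elapsed=$((elapsed + 1))
    done
    echo "$unit: active"
  done
}
```

A typical call would be `wait_for_units 30 containerd kubelet kube-proxy`. An `active` reading can still be followed by a crash. If a unit never becomes active or keeps restarting, `sudo journalctl -u kubelet --no-pager` (substituting the unit name) usually shows why.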

Now make sure that both nodes are registering with the cluster. Log in to the control node and run this command:

```bash
kubectl get nodes --kubeconfig /home/cloud_user/admin.kubeconfig
```

Make sure your two worker nodes appear. Note that they will likely not be in the `Ready` state yet. For now, just make sure they both show up.
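The kubelet unit passes `--hostname-override=${HOSTNAME}`, so each worker should register under the hostname set earlier. The sketch below checks this from the control node. The hypothetical `missing_nodes` helper reads registered node names on stdin and prints any expected name that has not appeared yet. `kubectl get nodes --no-headers` piped through `awk` supplies the NAME column.

```bash
#!/bin/sh
# Hypothetical helper: missing_nodes EXPECTED...
# Reads registered node names (one per line) on stdin and prints each
# EXPECTED name that is not among them. No output means all have registered.
missing_nodes() {
  local registered expected
  registered="$(cat)"
  for expected in "$@"; do
    # -x: whole-line match; -F: fixed string, so the dots are literal
    if ! printf '%s\n' "$registered" | grep -qxF "$expected"; then
      echo "$expected"
    fi
  done
}

# Usage on the control node (after defining the function):
#   kubectl get nodes --no-headers --kubeconfig /home/cloud_user/admin.kubeconfig \
#     | awk '{print $1}' \
#     | missing_nodes worker0.mylabserver.com worker1.mylabserver.com
```

This check only confirms registration. The STATUS column can still read `NotReady` at this stage.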

Final Step - Starting the Worker Nodes:

Once the services are configured, installed, and started, the worker nodes will:

- Register with the Kubernetes control plane (via kubelet).

- Join the cluster and be available to schedule workloads (containers/pods).

- Facilitate communication between pods and services (via kube-proxy and CNI plugins).

This configuration makes the worker nodes operational members of the cluster. They can run containerized applications and take part in network communication across it. Each worker node you bootstrap this way adds capacity the scheduler can place workloads on. That extra capacity is the foundation for scaling and for keeping applications available when individual nodes fail.