Context: Your team is working on setting up a new Kubernetes cluster. The necessary certificates and kubeconfigs have been provisioned, and the etcd cluster has been built. Two servers have been created that will become the Kubernetes controller nodes. You have been given the task of building out the Kubernetes control plane by installing and configuring the necessary Kubernetes services: kube-apiserver, kube-controller-manager, and kube-scheduler. You will also need to install kubectl.

Task List:

- Download and install the binaries.

- Configure the kube-apiserver service.

- Configure the kube-controller-manager service.

- Configure the kube-scheduler service.

- Successfully start all the services.

- Enable HTTP health checks.

- Set up RBAC (Role-Based Access Control) for kubelet authorization.

Download and install the binaries

sudo mkdir -p /etc/kubernetes/config

wget -q --show-progress --https-only --timestamping \
  "https://storage.googleapis.com/kubernetes-release/release/v1.10.2/bin/linux/amd64/kube-apiserver" \
  "https://storage.googleapis.com/kubernetes-release/release/v1.10.2/bin/linux/amd64/kube-controller-manager" \
  "https://storage.googleapis.com/kubernetes-release/release/v1.10.2/bin/linux/amd64/kube-scheduler" \
  "https://storage.googleapis.com/kubernetes-release/release/v1.10.2/bin/linux/amd64/kubectl"

chmod +x kube-apiserver kube-controller-manager kube-scheduler kubectl

sudo mv kube-apiserver kube-controller-manager kube-scheduler kubectl /usr/local/bin/

Explanation: This script first creates /etc/kubernetes/config, the directory that holds Kubernetes configuration files (the kube-scheduler configuration is written there later).

It then uses wget to download the four Kubernetes binaries (kube-apiserver, kube-controller-manager, kube-scheduler, and kubectl) for version v1.10.2. Finally, it makes them executable with chmod +x and moves them to /usr/local/bin/, which is on the default PATH, so they can be run from anywhere on the system. The first three are the control plane services; kubectl is the command-line tool used to communicate with the cluster.
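Because the same download has to run on both controller nodes, it can help to keep the release version in one place. The sketch below uses hypothetical helper names (KUBE_VERSION, k8s_binary_url, install_k8s_binaries are illustrative, not part of the lab); it builds the same storage.googleapis.com URLs used above and installs the binaries the same way.

```shell
#!/usr/bin/env bash
# Hypothetical helpers; KUBE_VERSION defaults to the release used in this lab.
KUBE_VERSION="${KUBE_VERSION:-v1.10.2}"
KUBE_BINARIES=(kube-apiserver kube-controller-manager kube-scheduler kubectl)

# Print the linux/amd64 download URL for one binary.
k8s_binary_url() {
  printf 'https://storage.googleapis.com/kubernetes-release/release/%s/bin/linux/amd64/%s\n' \
    "${KUBE_VERSION}" "$1"
}

# Download every binary, mark it executable, and move it to /usr/local/bin/.
install_k8s_binaries() {
  local bin
  for bin in "${KUBE_BINARIES[@]}"; do
    wget -q --show-progress --https-only --timestamping "$(k8s_binary_url "${bin}")" || return 1
  done
  chmod +x "${KUBE_BINARIES[@]}"
  sudo mv "${KUBE_BINARIES[@]}" /usr/local/bin/
}

# Usage (on each controller node):
#   install_k8s_binaries && kubectl version --client
```

Keeping the loop driven by one array means the chmod and mv steps can never drift out of sync with the list of downloaded files.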

Configure the kube-apiserver service.

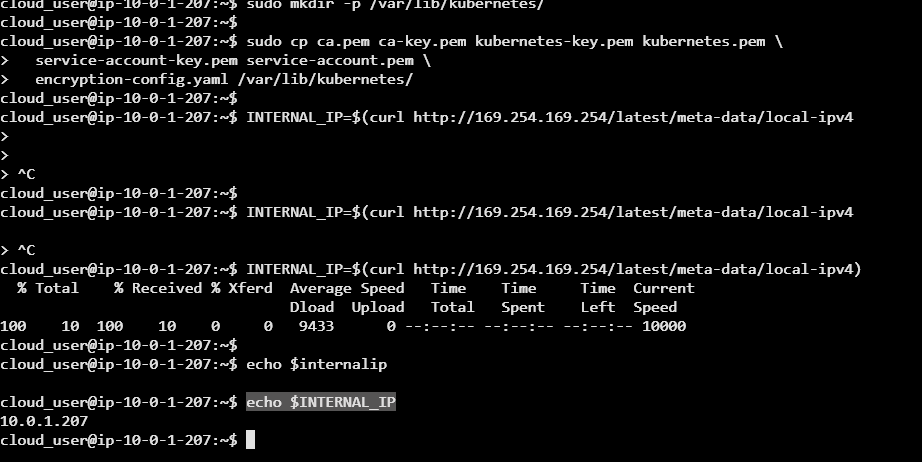

sudo mkdir -p /var/lib/kubernetes/

sudo cp ca.pem ca-key.pem kubernetes-key.pem kubernetes.pem \
  service-account-key.pem service-account.pem \
  encryption-config.yaml /var/lib/kubernetes/

INTERNAL_IP=$(curl -s http://169.254.169.254/latest/meta-data/local-ipv4)

Explanation: This script prepares the certificates and configuration files for the Kubernetes control plane. It creates /var/lib/kubernetes/ and copies the security-related files into it: the CA certificate and key (ca.pem, ca-key.pem), the API server certificate and key (kubernetes.pem, kubernetes-key.pem), the service account key pair (service-account.pem, service-account-key.pem), and the encryption configuration (encryption-config.yaml). Finally, it queries the cloud provider's instance metadata service for the machine's internal IP address and stores it in the INTERNAL_IP variable, which the kube-apiserver unit file uses as its advertise address.
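The metadata URL above is the EC2-style instance metadata endpoint. If curl cannot reach it, or the instance only accepts IMDSv2 session-token requests, INTERNAL_IP silently ends up empty. A defensive sketch, assuming an AWS EC2 instance; get_internal_ip and is_ipv4 are hypothetical helper names:

```shell
#!/usr/bin/env bash
# Return 0 if $1 is a dotted-quad IPv4 address with every octet <= 255.
is_ipv4() {
  local re='^[0-9]{1,3}(\.[0-9]{1,3}){3}$'
  local IFS=. octet
  local -a parts
  [[ $1 =~ $re ]] || return 1
  read -r -a parts <<< "$1"
  for octet in "${parts[@]}"; do
    (( 10#${octet} <= 255 )) || return 1   # 10# stops "08" being read as octal
  done
}

# Ask the EC2 instance metadata service for the private IPv4 address.
# Tries to obtain an IMDSv2 session token first; without one it falls
# back to a plain IMDSv1 request.
get_internal_ip() {
  local md=http://169.254.169.254/latest token ip
  local -a hdr=()
  if token=$(curl -sf --max-time 2 -X PUT "${md}/api/token" \
       -H "X-aws-ec2-metadata-token-ttl-seconds: 300"); then
    hdr=(-H "X-aws-ec2-metadata-token: ${token}")
  fi
  ip=$(curl -sf --max-time 2 "${hdr[@]}" "${md}/meta-data/local-ipv4") || return 1
  is_ipv4 "${ip}" || return 1
  printf '%s\n' "${ip}"
}

# Usage:
#   INTERNAL_IP=$(get_internal_ip) || { echo "could not determine INTERNAL_IP" >&2; exit 1; }
```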

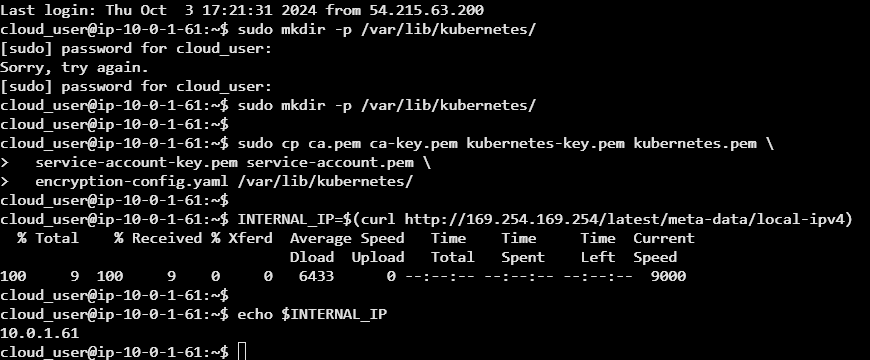

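Next, on each of the two controller nodes, we set more environment variables: ETCD_SERVER_0 and ETCD_SERVER_1, which the unit file uses as the addresses of the etcd servers. We then create the kube-apiserver systemd unit file: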

cat << EOF | sudo tee /etc/systemd/system/kube-apiserver.service
[Unit]
Description=Kubernetes API Server
Documentation=https://github.com/kubernetes/kubernetes

[Service]
ExecStart=/usr/local/bin/kube-apiserver \\
  --advertise-address=${INTERNAL_IP} \\
  --allow-privileged=true \\
  --apiserver-count=3 \\
  --audit-log-maxage=30 \\
  --audit-log-maxbackup=3 \\
  --audit-log-maxsize=100 \\
  --audit-log-path=/var/log/audit.log \\
  --authorization-mode=Node,RBAC \\
  --bind-address=0.0.0.0 \\
  --client-ca-file=/var/lib/kubernetes/ca.pem \\
  --enable-admission-plugins=Initializers,NamespaceLifecycle,NodeRestriction,LimitRanger,ServiceAccount,DefaultStorageClass,ResourceQuota \\
  --enable-swagger-ui=true \\
  --etcd-cafile=/var/lib/kubernetes/ca.pem \\
  --etcd-certfile=/var/lib/kubernetes/kubernetes.pem \\
  --etcd-keyfile=/var/lib/kubernetes/kubernetes-key.pem \\
  --etcd-servers=https://${ETCD_SERVER_0}:2379,https://${ETCD_SERVER_1}:2379 \\
  --event-ttl=1h \\
  --experimental-encryption-provider-config=/var/lib/kubernetes/encryption-config.yaml \\
  --kubelet-certificate-authority=/var/lib/kubernetes/ca.pem \\
  --kubelet-client-certificate=/var/lib/kubernetes/kubernetes.pem \\
  --kubelet-client-key=/var/lib/kubernetes/kubernetes-key.pem \\
  --kubelet-https=true \\
  --runtime-config=api/all \\
  --service-account-key-file=/var/lib/kubernetes/service-account.pem \\
  --service-cluster-ip-range=10.32.0.0/24 \\
  --service-node-port-range=30000-32767 \\
  --tls-cert-file=/var/lib/kubernetes/kubernetes.pem \\
  --tls-private-key-file=/var/lib/kubernetes/kubernetes-key.pem \\
  --v=2 \\
  --kubelet-preferred-address-types=InternalIP,InternalDNS,Hostname,ExternalIP,ExternalDNS
Restart=on-failure
RestartSec=5

[Install]
WantedBy=multi-user.target
EOF

We must run this on both controller nodes.

Explanation: This script creates a systemd unit file for the Kubernetes API server (kube-apiserver). It defines how the API server is started, including its certificates, etcd endpoints, and authorization modes, restarts the service automatically if it fails, and makes it part of the system's multi-user startup target.
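Because the heredoc delimiter (EOF) is unquoted, ${INTERNAL_IP}, ${ETCD_SERVER_0}, and ${ETCD_SERVER_1} are substituted when the file is written; if any of them is unset, the unit silently gets an empty value. A small check, sketched here as a hypothetical check_apiserver_unit function, can catch that before the service is started:

```shell
#!/usr/bin/env bash
# Hypothetical check: fail if a variable expanded to an empty string
# while the kube-apiserver unit file was being written.
check_apiserver_unit() {
  local unit="${1:-/etc/systemd/system/kube-apiserver.service}"
  [ -r "${unit}" ] || { echo "cannot read ${unit}" >&2; return 1; }
  # "--advertise-address= \" means INTERNAL_IP was empty;
  # "https://:2379" means an ETCD_SERVER_n variable was empty.
  if grep -Eq -e '--advertise-address=( |\\|$)' -e 'https://:2379' "${unit}"; then
    echo "empty variable substitution in ${unit}" >&2
    return 1
  fi
}

# Usage (on each controller node, after writing the unit):
#   check_apiserver_unit && sudo systemctl daemon-reload
```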

Configure the kube-controller-manager service

sudo mv kube-controller-manager.kubeconfig /var/lib/kubernetes/

cat << EOF | sudo tee /etc/systemd/system/kube-controller-manager.service
[Unit]
Description=Kubernetes Controller Manager
Documentation=https://github.com/kubernetes/kubernetes

[Service]
ExecStart=/usr/local/bin/kube-controller-manager \\
  --address=0.0.0.0 \\
  --cluster-cidr=10.200.0.0/16 \\
  --cluster-name=kubernetes \\
  --cluster-signing-cert-file=/var/lib/kubernetes/ca.pem \\
  --cluster-signing-key-file=/var/lib/kubernetes/ca-key.pem \\
  --kubeconfig=/var/lib/kubernetes/kube-controller-manager.kubeconfig \\
  --leader-elect=true \\
  --root-ca-file=/var/lib/kubernetes/ca.pem \\
  --service-account-private-key-file=/var/lib/kubernetes/service-account-key.pem \\
  --service-cluster-ip-range=10.32.0.0/24 \\
  --use-service-account-credentials=true \\
  --v=2
Restart=on-failure
RestartSec=5

[Install]
WantedBy=multi-user.target
EOF

Explanation: This script configures the Kubernetes Controller Manager as a systemd service. It first moves the Controller Manager's kubeconfig file to /var/lib/kubernetes/. It then writes the systemd unit file for kube-controller-manager, specifying the flags it starts with: the CA certificate and key used for signing, the service account private key, per-controller service account credentials, the pod network range (--cluster-cidr) and the service IP range, and leader election so that only one of the two controller managers is active at a time. The service restarts automatically on failure and is wanted by the multi-user target once enabled.
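The kube-apiserver and kube-controller-manager units both set --service-cluster-ip-range=10.32.0.0/24, and the two values should describe the same range. If one unit is edited later, a quick comparison helps. A sketch with hypothetical helper names, assuming the one-flag-per-line layout used above:

```shell
#!/usr/bin/env bash
# Print the value of --<flag>=VALUE from a unit file (first match only).
unit_flag_value() {
  awk -v flag="--$1=" '{
    for (i = 1; i <= NF; i++)
      if (index($i, flag) == 1) { print substr($i, length(flag) + 1); exit }
  }' "$2"
}

# Compare --service-cluster-ip-range between the two control plane units.
check_service_cidr() {
  local api_unit="${1:-/etc/systemd/system/kube-apiserver.service}"
  local cm_unit="${2:-/etc/systemd/system/kube-controller-manager.service}"
  local api cm
  api=$(unit_flag_value service-cluster-ip-range "${api_unit}")
  cm=$(unit_flag_value service-cluster-ip-range "${cm_unit}")
  if [ -z "${api}" ] || [ "${api}" != "${cm}" ]; then
    echo "service CIDR mismatch: apiserver='${api}' controller-manager='${cm}'" >&2
    return 1
  fi
  echo "service CIDR: ${api}"
}

# Usage: check_service_cidr
```

awk splits each line on whitespace, so the trailing backslash of each continuation line becomes its own field and never leaks into the extracted value.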

Configure the kube-scheduler service.

sudo mv kube-scheduler.kubeconfig /var/lib/kubernetes/

cat << EOF | sudo tee /etc/kubernetes/config/kube-scheduler.yaml
apiVersion: componentconfig/v1alpha1
kind: KubeSchedulerConfiguration
clientConnection:
  kubeconfig: "/var/lib/kubernetes/kube-scheduler.kubeconfig"
leaderElection:
  leaderElect: true
EOF

cat << EOF | sudo tee /etc/systemd/system/kube-scheduler.service
[Unit]
Description=Kubernetes Scheduler
Documentation=https://github.com/kubernetes/kubernetes

[Service]
ExecStart=/usr/local/bin/kube-scheduler \\
  --config=/etc/kubernetes/config/kube-scheduler.yaml \\
  --v=2
Restart=on-failure
RestartSec=5

[Install]
WantedBy=multi-user.target
EOF

Explanation: This script sets up the Kubernetes Scheduler as a systemd service. It first moves the Scheduler's kubeconfig file to /var/lib/kubernetes/. It then creates the scheduler configuration file (kube-scheduler.yaml) in /etc/kubernetes/config/, which points to the kubeconfig file and enables leader election for high availability; kubeconfig and leaderElect must be indented under their parent keys (clientConnection and leaderElection) for the YAML to have the intended structure. Finally, it creates the systemd unit file for kube-scheduler, which starts the scheduler with this configuration file and a logging verbosity of 2 (--v=2). The service restarts automatically on failure and is wanted by the multi-user target once enabled.
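Before starting the services, it can save a debugging round-trip to confirm that every file the three components reference is in place. A sketch with a hypothetical check_control_plane_files function; the file list is taken from the flags and configuration above, and the two directories are parameters so the check can be pointed elsewhere:

```shell
#!/usr/bin/env bash
# Files referenced by the kube-apiserver, kube-controller-manager and
# kube-scheduler configuration in this lab.
CONTROL_PLANE_FILES=(
  ca.pem ca-key.pem kubernetes.pem kubernetes-key.pem
  service-account.pem service-account-key.pem encryption-config.yaml
  kube-controller-manager.kubeconfig kube-scheduler.kubeconfig
)

# Report every missing or empty file; return non-zero if any were found.
check_control_plane_files() {
  local lib_dir="${1:-/var/lib/kubernetes}"
  local config_dir="${2:-/etc/kubernetes/config}"
  local f missing=0
  for f in "${CONTROL_PLANE_FILES[@]}"; do
    [ -s "${lib_dir}/${f}" ] || { echo "missing or empty: ${lib_dir}/${f}" >&2; missing=1; }
  done
  [ -s "${config_dir}/kube-scheduler.yaml" ] \
    || { echo "missing or empty: ${config_dir}/kube-scheduler.yaml" >&2; missing=1; }
  return "${missing}"
}

# Usage: check_control_plane_files && echo "all control plane files present"
```

The check only stats the files, so it works without reading the private keys themselves.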

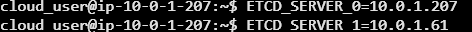

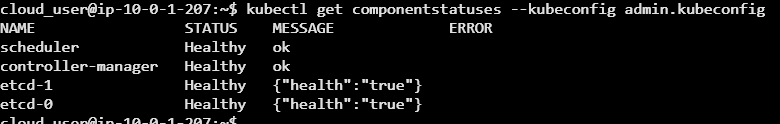

Successfully start all the services

Start the Kubernetes control plane services

sudo systemctl daemon-reload
sudo systemctl enable kube-apiserver kube-controller-manager kube-scheduler
sudo systemctl start kube-apiserver kube-controller-manager kube-scheduler

We will then verify that everything is working as expected:

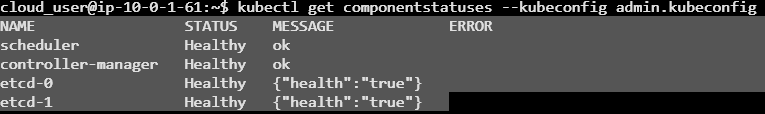

kubectl get componentstatuses --kubeconfig admin.kubeconfig
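Right after systemctl start, the API server may need a few seconds before it accepts connections, so the first kubectl call can fail. A sketch of a hypothetical retry helper plus a check that every component row reports Healthy, assuming the default table output in which STATUS is the second column:

```shell
#!/usr/bin/env bash
# retry ATTEMPTS DELAY_SECONDS COMMAND [ARGS...]: run COMMAND until it succeeds.
retry() {
  local attempts=$1 delay=$2 n
  shift 2
  for (( n = 1; n <= attempts; n++ )); do
    "$@" && return 0
    (( n < attempts )) && sleep "${delay}"
  done
  return 1
}

# Read `kubectl get componentstatuses` output on stdin; succeed only if
# there is at least one component row and every row is Healthy.
all_components_healthy() {
  awk 'NR > 1 { rows++; if ($2 != "Healthy") bad = 1 }
       END { exit (rows == 0 || bad) }'
}

# Usage (on a controller node):
#   retry 10 3 kubectl get componentstatuses --kubeconfig admin.kubeconfig >/dev/null
#   kubectl get componentstatuses --kubeconfig admin.kubeconfig | all_components_healthy
```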

Enable HTTP health checks

sudo apt-get install -y nginx

cat > kubernetes.default.svc.cluster.local << EOF
server {
  listen      80;
  server_name kubernetes.default.svc.cluster.local;

  location /healthz {
    proxy_pass                    https://127.0.0.1:6443/healthz;
    proxy_ssl_trusted_certificate /var/lib/kubernetes/ca.pem;
  }
}
EOF

sudo mv kubernetes.default.svc.cluster.local \
  /etc/nginx/sites-available/kubernetes.default.svc.cluster.local

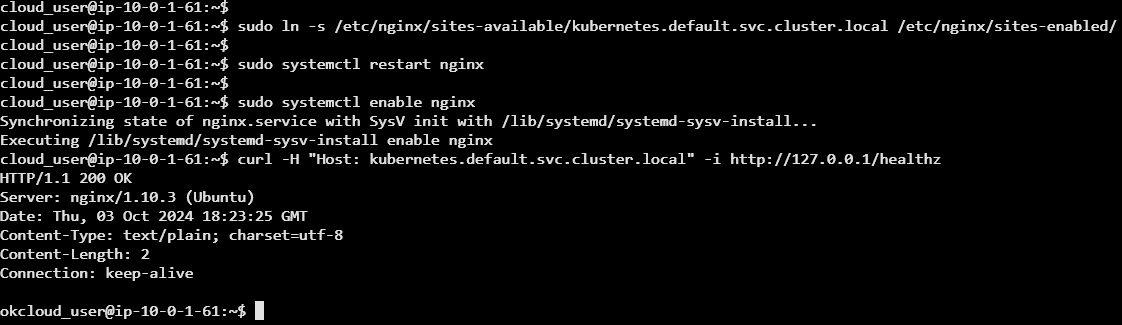

sudo ln -s /etc/nginx/sites-available/kubernetes.default.svc.cluster.local /etc/nginx/sites-enabled/
sudo systemctl restart nginx
sudo systemctl enable nginx

Explanation: This script installs NGINX and configures it as a reverse proxy for the Kubernetes API server's health check endpoint. It first installs NGINX using apt-get.

Then, it creates an NGINX server block that listens on port 80 and proxies requests for /healthz to the Kubernetes API server at https://127.0.0.1:6443/healthz, naming the cluster CA certificate (/var/lib/kubernetes/ca.pem) as the trusted certificate for the upstream TLS connection (NGINX only verifies the upstream certificate against it when proxy_ssl_verify is on, which is off by default). The file is moved to /etc/nginx/sites-available/, and a symbolic link in /etc/nginx/sites-enabled/ enables it. Finally, NGINX is restarted and enabled to start automatically at boot.
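The ln -s step fails with "File exists" if the script is run a second time. A hypothetical re-runnable variant: it replaces the link with ln -sfn and validates the configuration with nginx -t before restarting. NGINX_ROOT and SUDO are illustrative variables (not part of the lab) so the link step can be exercised outside /etc:

```shell
#!/usr/bin/env bash
SITE=kubernetes.default.svc.cluster.local

# Create (or replace) the sites-enabled link for the health check site.
enable_healthz_site() {
  local root="${NGINX_ROOT:-/etc/nginx}"
  # SUDO defaults to "sudo"; set SUDO= to run the command without it.
  ${SUDO-sudo} ln -sfn "${root}/sites-available/${SITE}" "${root}/sites-enabled/${SITE}"
}

# Validate the whole NGINX configuration before restarting and enabling it.
restart_nginx_if_valid() {
  sudo nginx -t && sudo systemctl restart nginx && sudo systemctl enable nginx
}

# Usage:
#   enable_healthz_site && restart_nginx_if_valid
```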

Once the health check proxy is in place, we verify that the health check returns HTTP 200 OK:

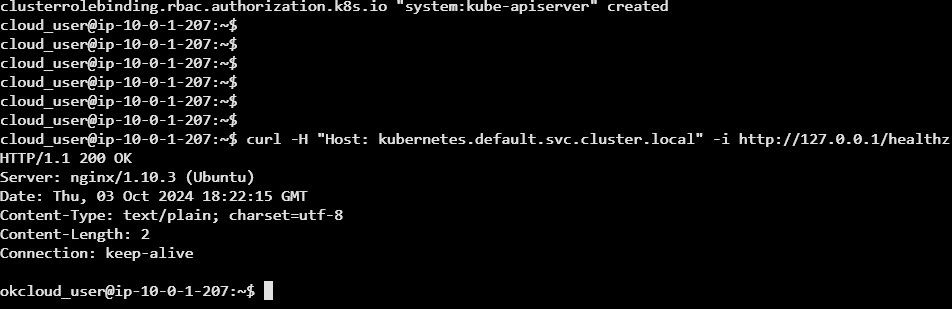

curl -H "Host: kubernetes.default.svc.cluster.local" -i http://127.0.0.1/healthz
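For scripting, comparing the status code is easier than reading the headers. A sketch using curl's -w '%{http_code}' write-out option; healthz_code and status_line_code are hypothetical helper names:

```shell
#!/usr/bin/env bash
# Print only the HTTP status code returned by the NGINX health check proxy.
healthz_code() {
  curl -s -o /dev/null -w '%{http_code}' \
    -H "Host: kubernetes.default.svc.cluster.local" \
    "${1:-http://127.0.0.1/healthz}"
}

# Extract the status code from the first line of `curl -i` output on stdin.
status_line_code() {
  head -n 1 | tr -d '\r' | awk '{ print $2 }'
}

# Usage:
#   [ "$(healthz_code)" = "200" ] && echo "health check OK"
#   curl -s -i -H "Host: kubernetes.default.svc.cluster.local" http://127.0.0.1/healthz | status_line_code
```

If NGINX itself is unreachable, curl reports the code as 000, which the comparison above also treats as a failure.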

Set up RBAC for kubelet authorization

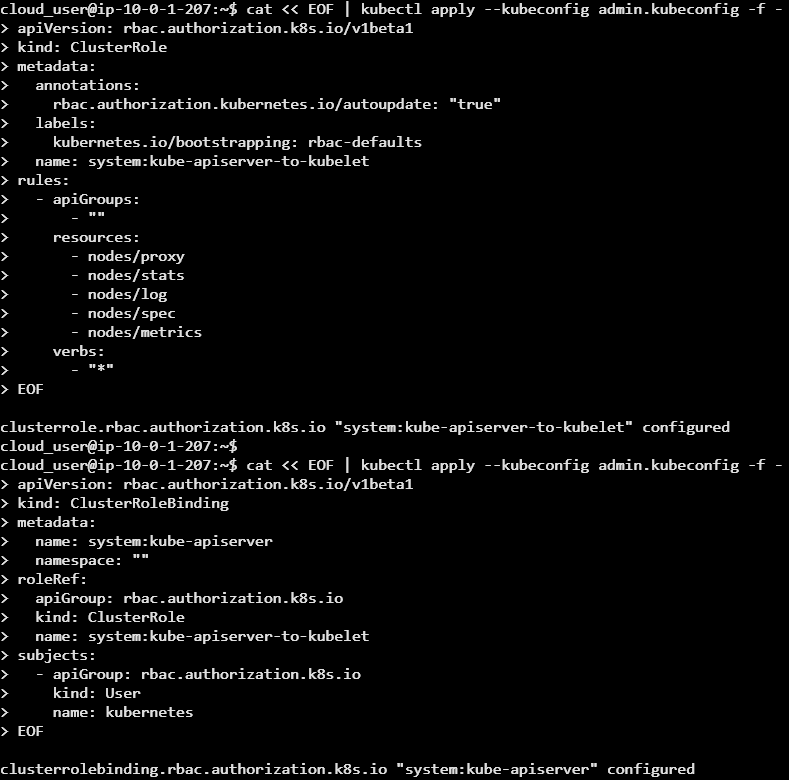

Explanation: Execution of this script configures Role-Based Access Control (RBAC) in Kubernetes to grant the API server permissions to interact with kubelets (the agents running on each node). The first command creates a ClusterRole called system:kube-apiserver-to-kubelet, which defines a set of permissions (or rules) allowing access to various node-level resources, such as node proxies, stats, logs, specs, and metrics, with all possible actions (verbs: "*") permitted.

The second command creates a ClusterRoleBinding called system:kube-apiserver, which binds the previously created ClusterRole to the kubernetes user, allowing the Kubernetes API server to interact with the kubelets using the permissions defined in the ClusterRole. Both actions are applied using kubectl with the provided admin.kubeconfig for authorization.
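The commands themselves appear only in the screenshot. The sketch below reconstructs them from the description, assuming the rbac.authorization.k8s.io/v1 API (generally available since Kubernetes 1.8, so it should be accepted by v1.10.2); the manifest in the screenshot may differ, for example in apiVersion or annotations. A hypothetical kubelet_rbac_manifests function prints both objects as one YAML stream that can be piped to kubectl apply:

```shell
#!/usr/bin/env bash
# Print the ClusterRole and ClusterRoleBinding described above as one
# multi-document YAML stream.
kubelet_rbac_manifests() {
  cat <<'EOF'
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: system:kube-apiserver-to-kubelet
rules:
  - apiGroups: [""]
    resources:
      - nodes/proxy
      - nodes/stats
      - nodes/log
      - nodes/spec
      - nodes/metrics
    verbs: ["*"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: system:kube-apiserver
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:kube-apiserver-to-kubelet
subjects:
  - apiGroup: rbac.authorization.k8s.io
    kind: User
    name: kubernetes
EOF
}

# Usage (run once, from a controller node):
#   kubelet_rbac_manifests | kubectl apply --kubeconfig admin.kubeconfig -f -
#   kubectl get clusterrolebinding system:kube-apiserver --kubeconfig admin.kubeconfig
```

Because RBAC objects are cluster-wide, applying them from one controller is enough; running the pipeline again simply reapplies the same objects.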

Conclusion:

Together, these steps configure the key control plane components of a Kubernetes cluster (the API server, Controller Manager, and Scheduler) as systemd services. They also set up NGINX as a reverse proxy for HTTP health checks and configure RBAC permissions so the API server can communicate securely with the kubelets. Running the same steps on both controller nodes, with leader election enabled for the Controller Manager and Scheduler, yields a well-defined, secure, and redundant Kubernetes control plane.